How We Solved the Context Wall for Multi-Step AI Agents: A Production Journey

The Problem: When AI Agents Hit the Context Wall

We’re building multi-step AI agents at JustCopy.ai that autonomously complete complex software development tasks - the kind that require dozens of sequential steps. But we hit a major scaling challenge that many in this community probably face: token explosion.

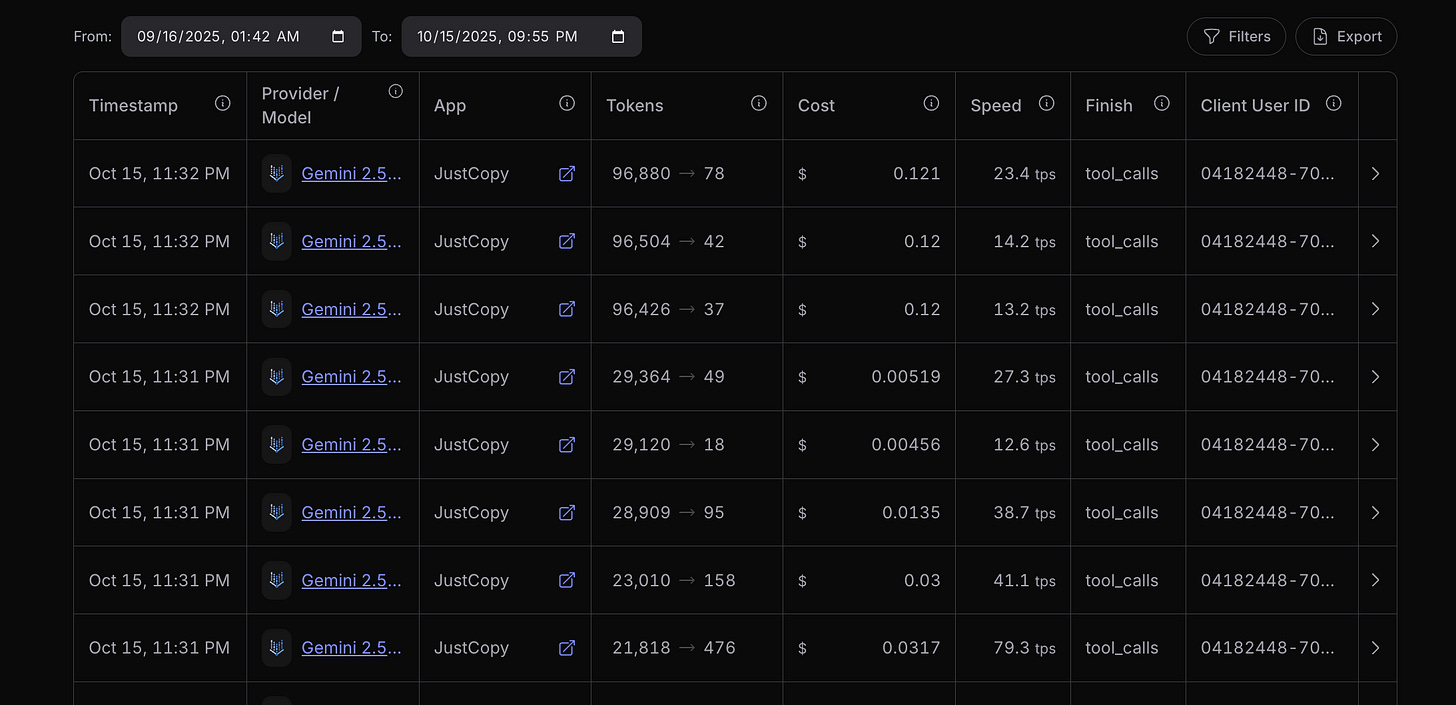

Here’s what we observed in production:

- Step 1: 3 messages, 19K tokens

- Step 10: 23 messages, 45K tokens

- Step 20: 43 messages, 95K tokens

- Step 30: 63 messages, 150K tokens

Getting expensive fast! At $1.25 per million tokens, this adds up quickly. More critically, we were approaching context limits and latency was increasing with every step.

Why This Happens

Multi-step agentic workflows create exponential context growth. Each step adds multiple messages:

- User/system instructions

- Assistant tool calls

- Tool result messages

- Model responses

Traditional solutions like sliding windows lose critical context about early decisions. We needed something smarter.

Our Solution: Two-Layer AI-Powered Compression

We implemented an intelligent compression system with two layers:

Layer 1: Message-Level Compression

Instead of dropping old messages, we use AI to summarize them. Key insight: the last 15 messages contain the agent’s current working context. Everything before that can be compressed into a high-level summary that preserves key decisions, errors encountered, and project state.

Layer 2: Tool Result Compression

Large tool outputs (file reads, directory listings) are also summarized when they exceed size thresholds, with caching to avoid re-processing the same content.

The Results: Real Production Metrics

The transformation was dramatic:

Without Compression:

- Step 10: 45K tokens, $0.056 per step

- Step 20: 95K tokens, $0.119 per step

- Step 30: 150K tokens, $0.188 per step

Before

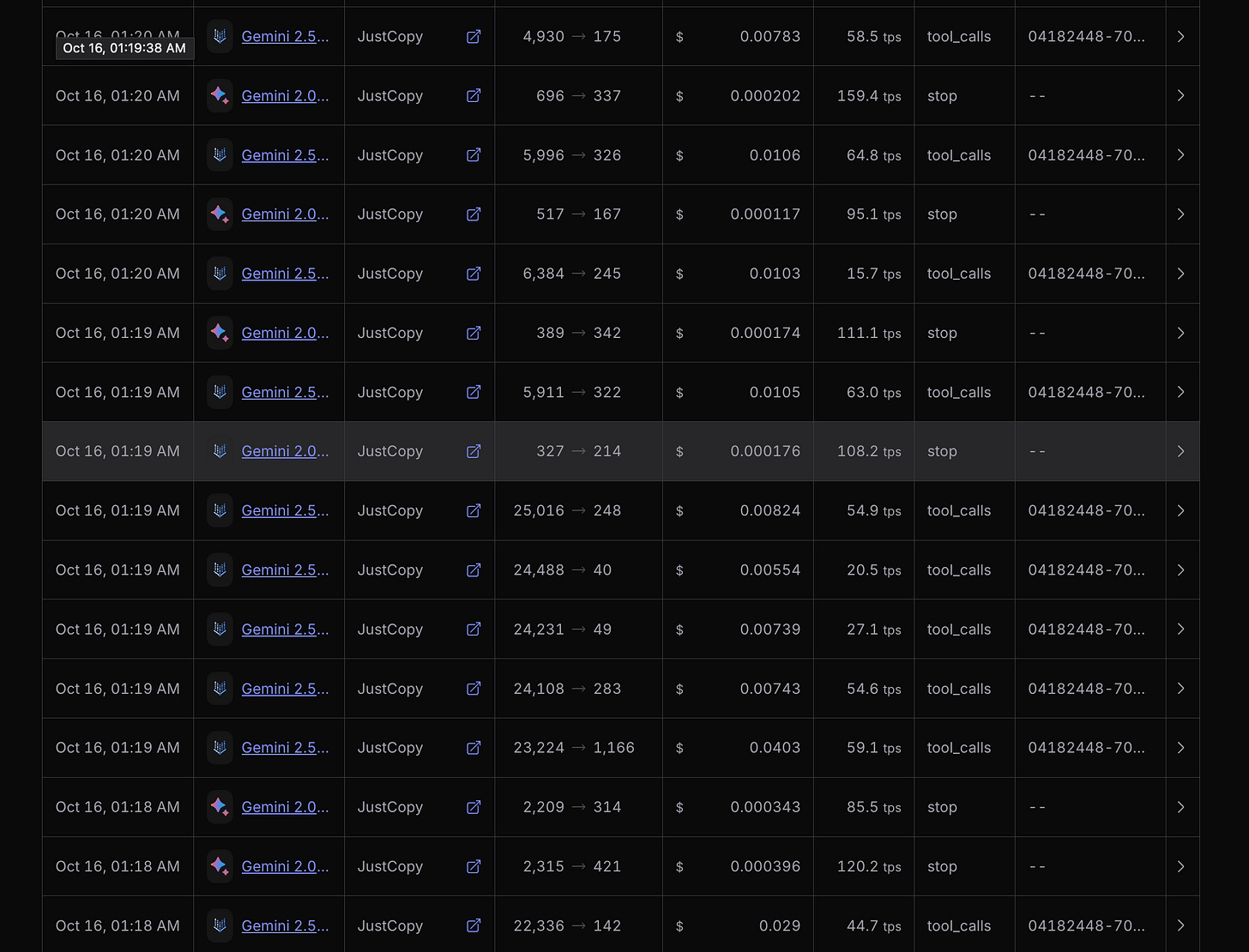

With AI Compression:

- Step 10: 28K tokens, $0.035 per step (-37% cost)

- Step 20: 30K tokens, $0.038 per step (-68% cost)

- Step 30: 31K tokens, $0.039 per step (-79% cost)

By step 30, we’re seeing 60-80% token reduction. For a 50-step agent workflow, that’s $4+ in savings per conversation.

After

Business Impact Beyond Cost Savings

This optimization directly supports our broader goals at JustCopy.ai - enabling autonomous development workflows that can scale globally. The cost efficiency allows us to offer more accessible AI-powered development tools, while the improved performance supports better SEO outcomes for generated projects and smoother deployment across different geographical regions.

Most importantly, agents that can maintain context across 50+ steps unlock entirely new use cases that weren’t feasible before due to context limits.

Key Implementation Insights

Use AI to compress context for AI - Models excel at summarization, and the summaries don’t need to be perfect, just good enough to maintain decision context.

Smart window sizing matters - We found 15 messages to be the sweet spot for preserving recent context while enabling significant compression.

Preserve what matters*- Always keep user messages, system definitions, and the most recent working context detailed.

Cost optimization - Using a cheaper model for compression costs pennies while saving dollars on the main model.

What We Learned

- Compression gets more effective as conversations grow longer

- Agent quality remained high - no accuracy loss observed

- Stage-aware filtering can reduce tokens further by only keeping relevant context

- Caching summaries eliminates redundant compression costs

- The approach scales beautifully for global deployment scenarios

The Bigger Picture

Context management isn’t just a technical challenge - it’s enabling the next generation of autonomous AI workflows. Whether you’re building development agents, content creation tools, or other multi-step systems, solving the context wall opens up entirely new possibilities.

What’s Next?

We’re exploring adaptive window sizing, semantic compression, and multi-tier caching. The key insight remains: use AI to intelligently manage AI context.